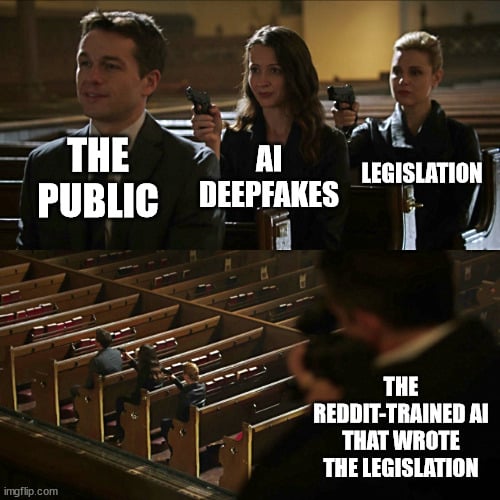

Should we trust an LLM to write legal definitions, in this case the definition of a deepfake? It seems the state rep. couldn't properly proofread the model's output, since he was “really struggling with the technical aspects of how to define what a deepfake was.”

These types of things are exactly what Generative AI models are good for, as much as Internet people don’t want to hear it.

Things that are massively repeatable based on previous versions (like legislation, contracts, etc.) are pretty much perfect for it. These are just tools for already competent people. So in theory you have GenAI crank out the boring stuff and have an expert “fill in the blanks,” so to speak.

Ideally it would be a generative AI trained specifically on legal textbooks.

I don’t know why there seem to be no LLMs trained specifically on expert subject matter.

There are, just not available publicly. Tons of enterprises (law firms included) are paying to have models trained on their data.

> There are, just not available publicly.

I meant publicly available ones.

I understand the irony. But let's not pretend they blindly used the output or even generated a full page. It was a specific section, meant to provide a technical definition of “what is a deepfake”.

“I was really struggling with the technical aspects of how to define what a deepfake was. So I thought to myself, ‘Well, why not ask the subject matter expert (I do not agree with that wording, lol), ChatGPT?’” Kolodin said.

The legislator from Maricopa County said he “uploaded the draft of the bill that I was working on and said, you know, please, please put a subparagraph in with that definition, and it spit out a subparagraph of that definition.”

“There’s also a robust process in the Legislature,” Kolodin continued. “If ChatGPT had effed up some of the language or did something that would have been harmful, I would have spotted it, one of the 10 stakeholder groups that worked on or looked at this bill, the ACLU would have spotted, the broadcasters association would have spotted it, it would have got brought out in committee testimony.”

But Kolodin said that portion of the bill fared better than other parts that were written by humans. “In fact, the portion of the bill that ChatGPT wrote was probably one of the least amended portions,” he said.

I do not agree with his statement that any mistakes made by AI could also be made by humans. In my experience, the reasoning, and the errors in reasoning, are quite different. But the way ChatGPT was used here is absolutely fair.

No kidding. When I read that, my first thought was, “He’s clearly at least above the median intelligence of his fellow Arizona GOP reps, if not in the top 10% of their entire conference.”

Anyone who read the article AND has experience with the Arizona GOP probably thought the same thing.

The Arizona GOP collects some of the dumbest people alive.

The Speznasz.

Little pig boy comes from the dirt.

Someone should run all the law books through ChatGPT so we can have a free, open-source lawyer on our phones.

During a traffic stop: “Hold on officer, I gotta ask my lawyer. It says to shut the hell up.”

Cop still shoots him in the head so he can learn his lesson. He pulled out his phone!

Honestly, I think this is the inevitable future. There are lots of jobs where what you’re paying for is the knowledge. And while LLMs likely won’t be as good as an actual expert, most “professionals” I've dealt with, both in my own professional work and when contracting out “professional” work, are not even remotely experts, and a properly trained LLM will run circles around them.

You won’t be able to buy them, because machines are, for some reason, not allowed to be fallible like humans, but I can certainly see a scenario where someone takes an open-source LLM and trains it with professional materials (obtained both legally and illegally) and releases it for free, and it does a better job than 70% of “professionals”.

🙊 and the groupthink nonsense continues…

Y’all know those grammar-checking thingies? Yeah, same basic thing. You know when you’re stuck writing something and your wording isn’t quite what you’d like? Maybe you ask another person for ideas. Same thing.

Is it smart to ask AI to write something outright? About as smart as asking a random person on the street to do the same. Is it smart to use a proprietary AI that has ulterior political motives? Things might leak, like this, by proxy. Is it smart for people to ask others to proofread their work? Does it matter if that "person" is a grammar checker that suggests alternate wording and has most accessible human-written language at its disposal?
