Yeah, that’s pretty bad. We all know you can bait LLMs into spitting out some evil stuff, but the fact that they do it on their own is scary.

Below the age of 30, technical knowledge declines rapidly, so it’s very unlikely they’d be here.

It’s not just that right-wing voices emerge; democratic-minded people now see it as pointless to argue, which is the much bigger damage. Imagine what happens when people no longer fight back. We live in post-factual times; it’s over.

For security updates in critical infrastructure, no. You want those right away, ideally instantly. You can’t risk a zero-day being used to kill people.

Yeah, if you have that, you get parallel uploads and streams. You also get tutorials and pretty much all the content.

It’s also not as if I care. In case of total collapse, if I’m hungry, I’ll just take the food regardless. Cash is pointless since we’ve already gone digital, even in a cash-heavy country like mine.

All fucking idiots.

Into the Breach.

Better Call Saul.

Thanks, I hope I remember this if it ever becomes relevant to me.

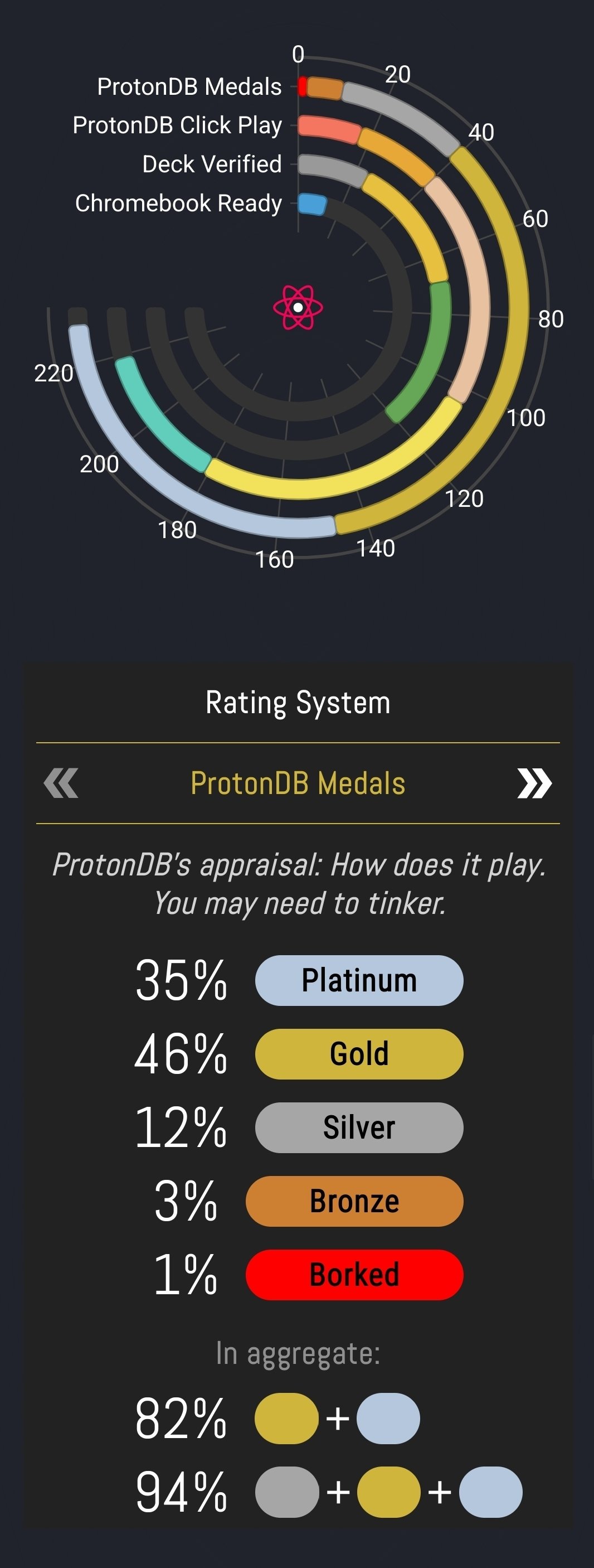

Pretty good. Only two multiplayer games in the silver tier, which I might play once every 3 to 5 years. The rest I don’t care about. But overall very good if you don’t care about multiplayer games.

“Recommendations” is just a marketing euphemism. Any serious journalist would call them what they are: ads.

Google ruined its search and is now moving on to the remaining products that still work. They are so dumb.

Where’s the “If AI destroys humanity, we deserved it”?

YouTube shorts are already a thing.

I too miss the built-in IR blaster on my S3.

It’s a perfect replacement. I’ve been using it for months now and won’t go back to Gboard. There’s an option for everything. Even multiple languages work if you set it up correctly.

Yes, replies degrade the longer a conversation goes. Maybe this student hit the jackpot by triggering a fiction-writer-style reply from something in the training data. It can be reproduced in a way similar to what the student did: ask many questions, and at a certain point you’ll notice that even simple facts come out wrong. I have personally observed this with ChatGPT multiple times. It’s easier to trigger with multiple similar but unrelated questions. My guess is that the AI tries to push the wider context and chat history through the same learned “paths”, burns them out and blocks them that way, and then tries to find a different direction, similar to the path electricity from a lightning strike can take.