I am a fairly radical leftist and a pacifist and you wouldn’t believe the amount of hoo-ra military, toxic masculinity, explosions and people dying, gun-lover bullshit the YouTube algorithm has attempted to force down my throat in the past year. I block every single channel that they recommend yet I am still inundated.

Now, with shorts, it’s like they reset their whole algorithm entirely and put it into sensationalist overdrive to compete with TikTok.

I really want to know why their algorithm varies so wildly from person to person. This isn’t the first time I’ve seen people say this about YT.

In my case, though, their algorithm seems fairly good at recommending what I’m actually interested in, with none of the other crap people always complain about. And when it does recommend something I’m not interested in, it’s usually something benign, like a video on knitting or something.

None of this out-of-nowhere far-right BS gets pushed to me, and for a lot of what I do get, I can tell why it’s being recommended.

For example, my feed is starting to show some lawn care/landscaping videos, and I know it’s likely related to the fact that I was looking up videos on how to restring my weed trimmer.

I think it depends on the things you watch. For example, if you watch a lot of counter-apologetics targeted towards Christianity, YouTube will eventually try out sending you pro-Christian apologetics videos. Similarly, if you watch a lot of anti-Conservative commentary, YouTube will try sending you Conservative crap, because they’re adjacent and share that “Conservative” thread.

Additionally, if you click on those videos and add a negative comment, the algorithm just knows you engaged with it, and it will then flood your feed with more.

It doesn’t care what your core interests are; it just aims to increase your engagement by any means necessary.
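To make that point concrete, here is a toy sketch of a ranker that only counts interactions and ignores whether they were positive. This is not YouTube's actual system, which isn't public; the function names, the event format, and every weight below are made up purely for illustration.

```python
from collections import Counter

# Hypothetical weights for each kind of interaction (invented for this sketch).
# Note that a dislike or a comment adds to the score regardless of sentiment.
ENGAGEMENT_WEIGHTS = {"watch": 1.0, "like": 2.0, "dislike": 2.0, "comment": 3.0}


def topic_scores(events):
    """Sum engagement per topic, ignoring whether the reaction was positive."""
    scores = Counter()
    for topic, action in events:
        scores[topic] += ENGAGEMENT_WEIGHTS.get(action, 0.0)
    return scores


def recommend(events, k=2):
    """Return the k topics with the highest engagement totals."""
    return [topic for topic, _ in topic_scores(events).most_common(k)]


events = [
    ("cooking", "watch"),
    ("cooking", "like"),
    ("politics", "watch"),
    ("politics", "dislike"),
    ("politics", "comment"),  # an angry comment still counts as engagement
]
print(recommend(events, k=1))
```

In this toy model, hate-watching and leaving an angry comment on one politics video outweighs watching and liking a cooking video, so politics ends up on top, which is the behavior described above.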

Maybe it depends on what you watch. I use YouTube for music (only things that I search for) and sometimes live streams of an owl nest or something like that.

If I stick to that, the recommendations are sort of OK. Usually stuff I watched before. Little to no clickbait or random topics.

I clicked on one reaction video to a song I listened to just to see what would happen. The recommendations turned into like 90% reaction videos, plus a bunch of topics I’ve never shown any interest in. U.S. politics, the death penalty in Japan, gaming, Brexit, some Christian hymns, and brand new videos on random topics.

Same here. I’ve never watched anything like that, yet my recommendations are filled with far-right grifters, interspersed with tech and cooking videos that are more my jam.

YouTube seems to think I love Farage, US gun nuts, US free speech (to be racist) people, anti-LGBT (especially T) channels.

I keep saying I’m not interested, yet they keep trying to convert me. Like fuck off YouTube, no I don’t want to see Jordan Peterson completely OWNS female liberal using FACTS and LOGIC

Don’t know about YouTube, but I have a similar experience on Twitter. I believe they probably see blocking, muting or reporting as “interaction” and show more of the same as a result.

On YouTube, on the other hand, I’ve never blocked a channel and almost never see militaristic or right-wing stuff (despite following some gun nerds, because I think they are funny).

Same here. I will just skip over them. At most I will block a video I actually already watched on another platform like Patreon.

That does nothing for what’s recommended.

‘Not Interested’ -> ‘Tell us why’ -> ‘I don’t like the video’ is what works.

I gave up and used an extension to remove video recommendations, block Shorts, and auto-redirect me from the home page to my subscriptions list. It’s a lot more pleasant to use now.

Same here. No matter how many you block, or mark not interested, it just doesn’t stop

The algorithm is just garbage at this point. I now watch YouTube exclusively through Invidious and can’t imagine going back.

My shorts are full of cooking channels, “fun fact” spiky hair guy, Greenland lady, Michael from vsauce and aviation content. Solid 6.5/10

Shorts are pretty annoying in general, but I’ll be damned if they’re not great for presenting a recipe in a very short amount of time. Like, you don’t need to see the chef dicing four whole onions in real time.

I get all cooking, history, and movie reviews: Max Miller, Red Letter Media, Behind the Bastards, and four-hour history/archaeology documentaries

I totally agree with you! I identify as a ‘progressive liberal’ and had to click on ‘Don’t show me ad by this advertiser’ multiple times yet I was pushed ads by the far right on YouTube and other websites (Google Ads) until the day of the election. A lot of those ads involved hate speech, blatant lies, and were just full of propaganda. It’s a shame that these companies are able to force things down our throats and we’re not able to do much about it. And I’m someone who wouldn’t mind paying for something like ‘YouTube Premium’ to get rid of those ads but don’t do that out of principle because the thought of supporting a company that’s propagating all this disgusting stuff just doesn’t sit right with me.

It’s at least good that my YouTube algorithm is otherwise dialed in, so I don’t get video recommendations for the same disgusting things. Just the ads. And while people here might like to reply that ads also follow their own algorithm, the far-right political parties in question had so much money to blow that they targeted the entire population rather than just a particular demographic (the right-leaning or neutrals out there). On clicking ‘Why am I seeing this advert’ I was always met with ‘Your Location’ as the reason.

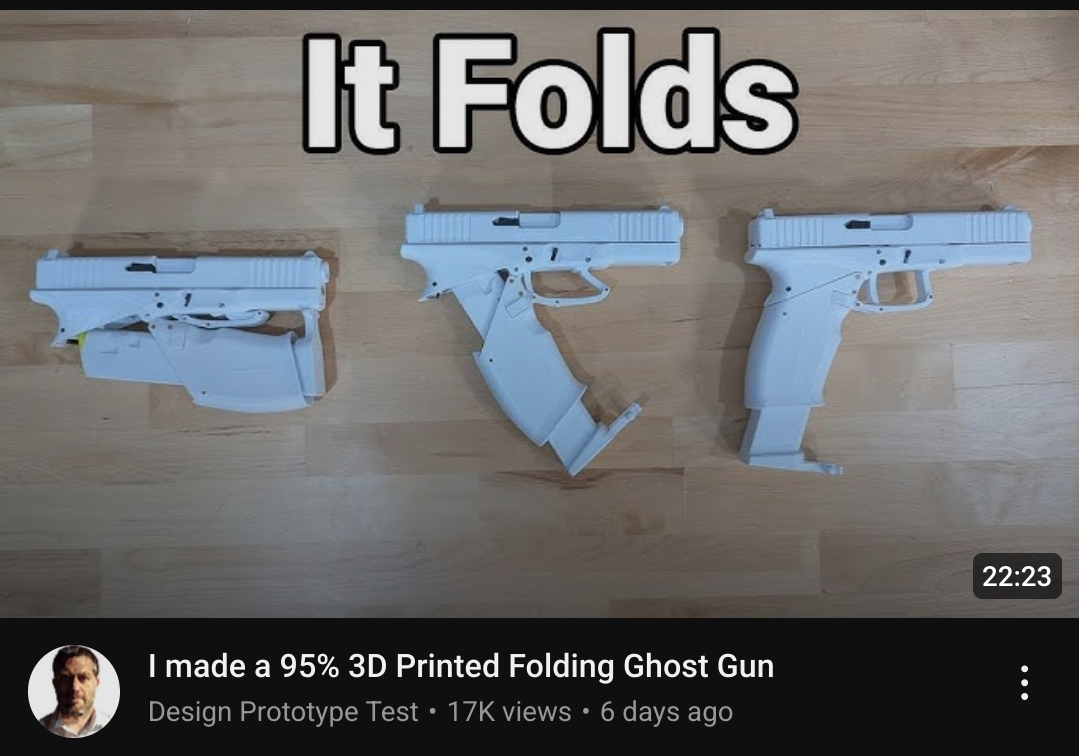

Saw crazy YouTube recommendations:

Dunno what you watch, but YT may think the videos are about similar topics (even if you think otherwise).

For myself I usually get recommended what I already watch: Tech, vtuber/anime, mechanical engineering, oddities (like Weird Explorer, Technology Connections).

I rarely get stuff outside of the bubble, like gun videos (some creator recently modified a Glock in the design of Milwaukee tools), meme channels, etc.

I highly suspect the videos you watch and interact heavily with may feed back to YT in a different way than you think.

And remember: Negative feelings provoke interactions and increase the session time which is a plus for them.